Beyond Words: New Metrics for AI in Healthcare Dialogue

Exploring new metrics for assessing AI's impact in healthcare dialogue, and their lessons for media professionals

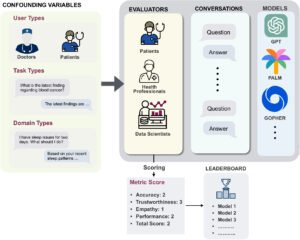

A recent paper, ‘Foundation metrics for evaluating effectiveness of healthcare conversations powered by generative AI’, appeared in npj Digital Medicine, a Nature Portfolio journal. The paper focuses on the use of generative AI in patient interaction and proposes a collection of new metrics intended to make measured performance more relevant to patients and healthcare professionals. Here, solli looks at the paper from a media perspective and draws out the lessons and opportunities it holds.

Context and Significance of AI in Healthcare

Generative Artificial Intelligence (AI) has transformative potential in healthcare, shifting the paradigm from traditional reactive models to more proactive and personalized patient care. Chatbots, as interactive conversational models, are at the forefront of this change, expected to enhance patient engagement, reduce provider workload, and improve health outcomes by delivering tailored diagnostic and therapeutic recommendations. Similar technologies are making their way into marketing and media within pharma, and we explore what guidance this paper offers stakeholders on using them.

The paper focuses on the use of chatbots in healthcare contexts and questions the value of relying only on common, linguistically focused measurements at the expense of measures that reflect real healthcare value. Specifically, it takes issue with how poorly traditional text-similarity measures, which compare surface wording, recognize medical texts that plainly mean the same thing, and it suggests that a more medically aware approach is required to capture these important details.

Without this awareness, an AI is likely to miss crucial details on which any advice it gives would be based.

Evaluation of Healthcare AI

The existing evaluation frameworks for generative AI, particularly in healthcare, are predominantly adapted from broader AI applications. These frameworks often emphasize computational and linguistic capabilities without adequately addressing the specific needs of healthcare interactions, such as medical accuracy, patient safety, and compliance with healthcare regulations.

One particularly relevant example in the paper is the poor performance in recognizing the similarity between two texts: “Regular exercise and a balanced diet are important for maintaining good cardiovascular health.” and “Engaging in regular physical activity and adopting a well-balanced diet is crucial for promoting optimal cardiovascular well-being.” An AI that cannot recognize these two expressions as similar will likely struggle to recognize the jargon used every day in the medical profession, and will also perform poorly at demystifying that jargon for patients.
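To make the point concrete, here is a minimal sketch of a surface-overlap score applied to the two sentences above. It is an illustration only: it uses a simple Jaccard overlap of word n-grams, in the spirit of word-matching metrics such as BLEU and ROUGE, and is not the calculation used in the paper. The function names (`tokenize`, `ngrams`, `jaccard_overlap`) and the tokenization rule are assumptions made for this example.

```python
import re

TEXT_A = ("Regular exercise and a balanced diet are important for "
          "maintaining good cardiovascular health.")
TEXT_B = ("Engaging in regular physical activity and adopting a well-balanced "
          "diet is crucial for promoting optimal cardiovascular well-being.")


def tokenize(text):
    """Lowercase the text and split it into words, keeping hyphenated words whole."""
    return re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())


def ngrams(tokens, n):
    """Return the set of distinct n-grams (as tuples) found in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def jaccard_overlap(text_a, text_b, n):
    """Share of distinct n-grams that the two texts have in common (0.0 to 1.0)."""
    grams_a = ngrams(tokenize(text_a), n)
    grams_b = ngrams(tokenize(text_b), n)
    union = grams_a | grams_b
    if not union:
        return 0.0
    return len(grams_a & grams_b) / len(union)


if __name__ == "__main__":
    print("single-word overlap:", jaccard_overlap(TEXT_A, TEXT_B, 1))
    print("two-word overlap:", jaccard_overlap(TEXT_A, TEXT_B, 2))
```

Under these assumptions, the two sentences share only a quarter of their distinct words (mostly filler such as "and", "a" and "for") and not a single two-word phrase, even though a clinician or patient would read them as saying the same thing. A score built on word matching alone would therefore rate them as largely unrelated.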

A broad overview of the evaluation process and the role of metrics.

Limitations of Current Metrics

Current metrics used to evaluate healthcare AI applications often lack depth in several critical areas:

- Medical Knowledge Comprehension: Many models are evaluated on their linguistic prowess without thorough validation of their medical knowledge, which is vital for ensuring the accuracy of health-related recommendations.

- User-Centric Factors: There is insufficient emphasis on factors like empathy, trust-building, and personalization, which are crucial for patient-centered care.

- Ethical Considerations: The metrics rarely consider the ethical implications of AI interactions, such as biases and privacy concerns, which can significantly impact patient trust and the ethical standing of AI applications.

Proposed Comprehensive Evaluation Metrics

To address these shortcomings, the paper proposes a detailed set of metrics tailored for healthcare AI applications, focusing on three main areas:

- Language Processing Abilities: Metrics to assess the AI’s capability to process and understand medical terminology and context accurately.

- Clinical Task Impact: Metrics to evaluate how effectively the AI supports real-world clinical tasks, such as patient triage, symptom analysis, and ongoing patient management.

- User Interaction Quality: Metrics aimed at evaluating the quality of interactions between the AI and its users, focusing on the model’s ability to provide empathetic responses, maintain patient privacy, and personalize interactions based on individual patient data.

Challenges in Implementing New Metrics

The implementation of these new metrics faces several challenges:

- Complexity of Healthcare Dialogues: The diverse and complex nature of medical dialogues requires AI models to handle a wide range of topics and adapt to various conversational contexts. Differences in tone and level of jargon between patients and healthcare professionals (HCPs) within a single conversation complicate this further.

- Sensitivity of Medical Information: Evaluating AI applications in healthcare demands rigorous standards to ensure sensitive patient information is handled securely and ethically. The paper does not discuss the relevant privacy concerns in any depth, though they are clearly a challenge too.

- Standardization Across Systems: There is a need for standardized evaluation methods that can be universally applied across different AI systems and healthcare settings. Who would coordinate and enforce these standards is a natural next question that the paper leaves open.

Framework for Evaluation

The paper recommends the development of a cooperative and standardized evaluation framework that encompasses these metrics. This framework should facilitate consistent assessment practices across the industry and ensure that AI tools are both effective in their intended applications and adhere to ethical standards.

How does this relate to media within pharma?

Enhancing Patient Engagement

The insights from evaluating healthcare AI can be leveraged in paid media marketing to enhance patient engagement strategies. By understanding how AI tools are assessed for user interaction quality, marketers can better tailor their campaigns to meet the informational needs and preferences of specific patient groups, leading to more effective and personalized advertising.

Optimizing Content Delivery

Metrics related to the AI’s language processing abilities and clinical task impact can inform the content creation process in marketing. This allows for the development of content that is not only medically accurate but also contextually relevant to the target audience, thereby increasing the effectiveness of paid media campaigns in promoting pharmaceutical products and services.

Building Trust and Compliance

The ethical considerations and trust-building metrics used in AI evaluation can guide the development of marketing strategies that prioritize transparency and patient safety. This is particularly important in pharma, where building trust is crucial due to the sensitive nature of health-related information. Marketing campaigns can use these metrics to highlight the commitment of pharmaceutical companies to ethical practices and patient-centric care.

Targeted Advertising and Personalization

The learnings from AI evaluation in healthcare can enhance targeted advertising efforts in the pharmaceutical industry. By using AI to analyze user data and predict patient needs, marketers can more effectively allocate advertising resources to reach the most relevant audiences. Additionally, personalization metrics can help in crafting messages that resonate on a personal level with patients, improving engagement and conversion rates.

Conclusion

The application of AI in healthcare, and its evaluation against comprehensive, user-centered metrics, provide valuable insights that could reshape both medical practice and pharmaceutical media.

By adopting these metrics, marketers can ensure that their strategies not only comply with medical standards but also meet the evolving expectations of patients in a digital age. This strategic alignment will likely result in more effective marketing, improved patient outcomes, and greater trust in pharmaceutical brands.

This piece was authored as a collaboration between solli editorial and Rick Knowles. Rick is a seasoned technology leader with expertise in the pharmaceutical and media industries. Previously serving as CTO for M3 and Cognitant, he now leverages AI and machine learning innovations at Hikari Systems. Rick is deeply passionate about the synergy between healthcare and technology, driving advancements in both fields.